Training machine learning (ML) models can take a lot of time and consume a lot of computing power. The same can be true of testing the models if your datasets are large enough. These operations are resource-hungry and can tie up a computer for hours or days.

Training ML Models is Time Consuming

Building a machine learning model can be a time-consuming task. Sometimes the model gets the desired results immediately, but often it requires rounds of (re)training and (re)testing. This is particularly the case when the training data is questionable: for example, when it is unbalanced, contains ambiguous cases, or has too few data points to support accurate predictions.

It is generally accepted that models trained with more data perform better. Depending on the complexity of the model, the extra data can add a little or a lot to the training time. Either way, training tends to take over a computer, forcing users to go do something else in the meantime.

The Challenge

We recently worked on a proof-of-concept that required us to develop a classification model. Initially, we were provided with about 1 million labelled samples. We trained the model with 700K samples and tested it with the remaining 300K. The dataset was quite unbalanced, with only about 4% positive cases. The model achieved spectacular accuracy (99.97%) but produced an unacceptable number of false negatives. None of this is surprising given how unbalanced the training dataset was: when negatives dominate, getting the negatives right is enough to push accuracy very high. In the process of finding the right balance, we trained a few different models, used thresholds to control the rate of false negatives, and re-trained the model with a new dataset containing cleaner, more accurate cases. The details aside, the point is that we had to train and re-train the model several times to get to an acceptable outcome.
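To make the accuracy versus false-negative trade-off concrete, here is a minimal sketch in plain Python. It is not the model or data from our project: the 100 labels and scores below are made up for illustration (4% positives, to mirror the imbalance), and the function names are our own. It shows how accuracy can look excellent while half the positives are missed, and how lowering the decision threshold trades false positives for fewer false negatives.

```python
def confusion_counts(y_true, scores, threshold):
    """Return (tp, fp, tn, fn), predicting positive when score >= threshold."""
    tp = fp = tn = fn = 0
    for label, score in zip(y_true, scores):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    """Fraction of all samples classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)


def recall(tp, fp, tn, fn):
    """Fraction of actual positives that were caught."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Made-up data: 4 positives and 96 negatives, with hypothetical model scores.
y_true = [1, 1, 1, 1] + [0] * 96
scores = [0.9, 0.6, 0.4, 0.2] + [0.05] * 90 + [0.3] * 4 + [0.45] * 2

for threshold in (0.5, 0.25):
    counts = confusion_counts(y_true, scores, threshold)
    print(
        f"threshold={threshold}: "
        f"accuracy={accuracy(*counts):.2f}, "
        f"recall={recall(*counts):.2f}, "
        f"false negatives={counts[3]}, false positives={counts[1]}"
    )
```

On this toy data, dropping the threshold from 0.5 to 0.25 lowers accuracy slightly but cuts the missed positives in half, which is the kind of trade-off you have to weigh when false negatives are costly.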

The Machine and the Approach

As a startup, it is essential that we spend most of our time working, not waiting for computers to finish jobs. So we chose to rent a server and do our training and testing in the cloud.

In our case, we chose IBM Cloud. The choice was an easy one: we love what IBM is doing for startups, their machines are just as fine as any other cloud provider’s and their tech support is as geeky and willing to help as you could hope for.

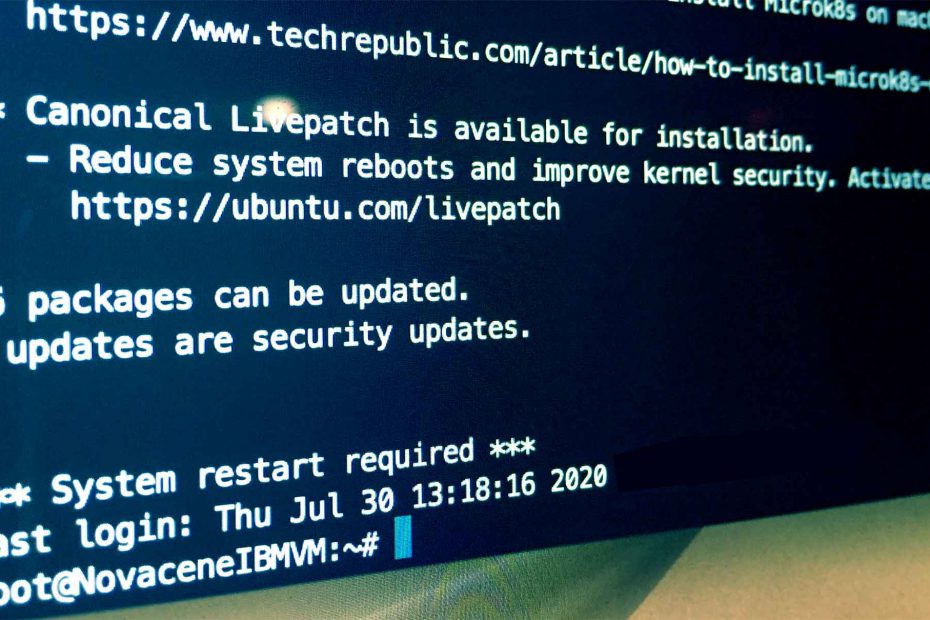

For this job, we set up a Linux VM with 16 vCPU and 64 GB RAM sitting close to us at a data centre in Toronto. The OS was Ubuntu 18.04-64 Minimal. IBM also offers GPUs, which can be useful depending on the type of model you are working with.

The first task was to develop the model locally and test it with a small dataset. Once we were confident that the code was running without issues, we copied it to the server. On the server, we installed Python and the required libraries. We then copied over the training dataset and started the training run. We kept checking in to make sure that the process was still running (it did crash a few times, so be prepared to be patient!). When the process finished, we discussed the test results with the client, made adjustments, re-trained and re-tested. We repeated the process until we arrived at a model that met the objectives.
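We did our checking in by hand, but a small wrapper can take some of the babysitting out of a long run. The sketch below is one possible approach, not what we actually used: it runs a command, appends its output to a log file you can inspect whenever you log in, and starts the command again if it exits with an error. The function name, the log file name and the `train.py` script in the usage comment are all hypothetical.

```python
import subprocess
import sys
import time


def run_with_retries(command, log_path, max_attempts=3, wait_seconds=0.0):
    """Run `command`, appending its output to `log_path`.

    If the command exits with a non-zero code, run it again, up to
    `max_attempts` times in total. Returns the number of attempts used,
    or raises RuntimeError if every attempt fails.
    """
    for attempt in range(1, max_attempts + 1):
        with open(log_path, "a") as log:
            stamp = time.strftime("%Y-%m-%d %H:%M:%S")
            log.write(f"--- attempt {attempt} started at {stamp} ---\n")
            log.flush()  # write the header before the child process writes
            result = subprocess.run(
                command, stdout=log, stderr=subprocess.STDOUT
            )
        if result.returncode == 0:
            return attempt
        time.sleep(wait_seconds)  # brief pause before trying again
    raise RuntimeError(
        f"{command!r} failed {max_attempts} times; see {log_path}"
    )


# Example usage (assumes a hypothetical training script named train.py):
# run_with_retries([sys.executable, "train.py"], "training.log", wait_seconds=30)
```

Note that this restarts the job from the beginning each time. If your training code can save and resume from checkpoints, a crash costs much less.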

Managing Server Cost

Thanks to per-hour billing, we were able to keep costs down by shutting down the machines when we were done using them (big machines are expensive!). It is very important to remember to do this once you know you won’t be using the machine for a few days. Gotcha alert: save an image of your VM before shutting it down. We learned the hard way that machines sometimes need the OS to be reloaded when powered back on. This can wipe all your files and data, so be sure to save an image.

Training machine learning models in the cloud is very easy, can save you a lot of time, and frees up developers to work on other things in the meantime.